Model Context Protocol

and how it could help create interoperable AI Agents for the Enterprise

Agentic AI is exciting for the enterprise. It could end up being what really beds AI down for enterprise organizations. Discrete, task-oriented workflows infused with AI are in many ways next-generation business process automation, except with AI autonomy rather than rigid rule frameworks. This is something the enterprise can understand and get huge value from, and it plays right into the Agentic Enterprise Vision:

Autonomous Workflows: AI agents can handle complex multi-step processes that previously required human coordination across departments

Context-Aware Decision Making: Agents leverage organizational knowledge to make informed decisions

Tool Orchestration: Rather than isolated point solutions, agents coordinate across multiple systems to complete tasks

However, if you have heard me talk about agentic AI before, you will have heard me bemoan the lack of standardized integration between different agentic AI systems and enterprise data sources. This could end up being a major barrier to realizing the full potential of agentic AI in business environments.

The risk of vendor lock-in is very real, as companies could end up building dependencies on proprietary connectors and frameworks. Without a standard, organizations could find themselves with fragmented AI capabilities that can't easily communicate with each other or with their core business systems.

Enter Anthropic's Model Context Protocol (MCP). The Model Context Protocol is an open standard designed to create secure, bidirectional connections between AI systems and various data sources. Anthropic wants us to think of MCP as "a USB-C port for AI applications", i.e., it standardizes how AI models connect to different data sources and tools, creating a universal interface for context exchange.

Each MCP server can provide a model with a catalog of available tools, resources, and prompts. The model can then initiate additional requests to the server to either retrieve information or execute a specific tool.
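As a rough illustration of that exchange, the sketch below builds the JSON-RPC 2.0 messages a client might send: one to list a server's tools and one to call a tool. The method names `tools/list` and `tools/call` come from the MCP specification; the `make_request` helper, the `search_documents` tool, and its arguments are hypothetical and only there for illustration.

```python
import itertools
import json

_ids = itertools.count(1)  # each JSON-RPC request carries its own id


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict of the kind MCP uses on the wire."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Step 1: ask the server which tools it offers.
list_tools = make_request("tools/list")

# Step 2: call one of them. "search_documents" is a hypothetical tool name.
call_tool = make_request(
    "tools/call",
    {"name": "search_documents", "arguments": {"query": "Q3 supplier contracts"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

Resources and prompts follow the same request/response pattern with their own method names.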

At its core, MCP addresses a fundamental limitation of even the most advanced AI models: their isolation from trapped organizational data.

MCP provides a standardized protocol that simplifies how AI systems access the data they need to produce relevant, contextually appropriate responses.

ARCHITECTURE

The MCP architecture follows a straightforward client-server model:

MCP Servers: These expose organizational data from various sources like content repositories, databases, and business tools

MCP Clients: AI-powered applications that connect to these servers to access contextual information
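To make the client-server split concrete, here is a toy, in-process sketch. It is not the official SDK: `ToyMCPServer`, the `lookup_part` tool, and the inventory data are all made up for illustration. A real server would speak JSON-RPC over a transport such as stdio or HTTP and would begin with an initialization handshake.

```python
class ToyMCPServer:
    """Hypothetical stand-in for an MCP server wrapping one data source."""

    def __init__(self, inventory):
        self._inventory = inventory

    def handle(self, request):
        """Dispatch a JSON-RPC style request dict and return a response dict."""
        method = request["method"]
        if method == "tools/list":
            result = {"tools": [{"name": "lookup_part",
                                 "description": "Return stock level for a part"}]}
        elif method == "tools/call" and request["params"]["name"] == "lookup_part":
            part = request["params"]["arguments"]["part_id"]
            stock = self._inventory.get(part, 0)
            result = {"content": [{"type": "text", "text": f"{part}: {stock} in stock"}]}
        else:
            # -32601 is the JSON-RPC 2.0 "method not found" code; this toy
            # also returns it for unknown tool names to keep things short.
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# The "client" side: an AI application sending requests to the server.
server = ToyMCPServer({"bolt-m8": 1200})
tools = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
answer = server.handle({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "lookup_part", "arguments": {"part_id": "bolt-m8"}},
})
print(tools["result"]["tools"][0]["name"])
print(answer["result"]["content"][0]["text"])
```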

The protocol includes three major components:

The Model Context Protocol specification and SDKs

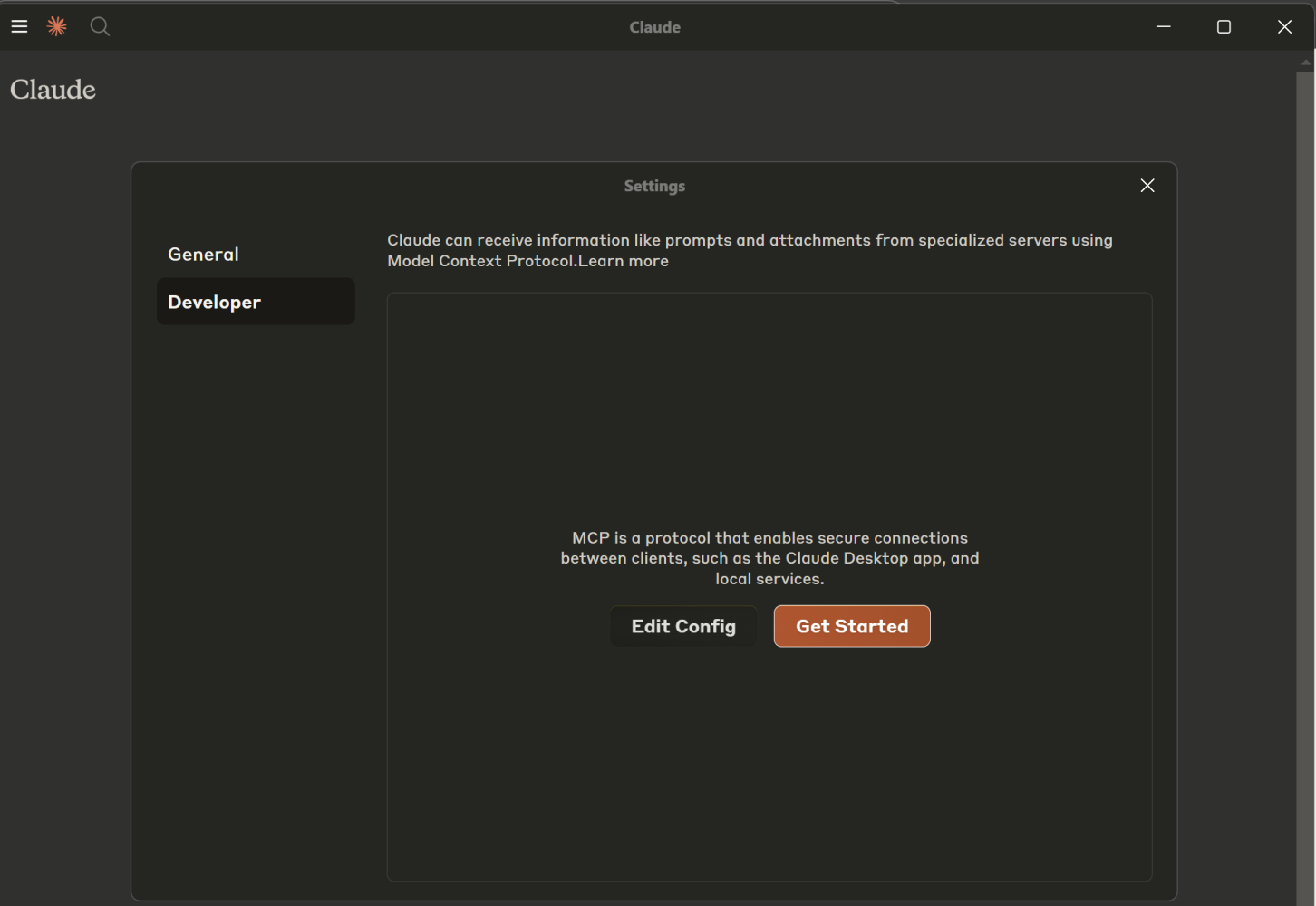

Local MCP server support in Claude Desktop applications

An open-source repository of pre-built MCP servers

GETTING STARTED WITH MCP

Organizations interested in implementing MCP can begin by:

Installing pre-built MCP servers through the Claude Desktop app

Claude Desktop was the first implementation (more information on Claude Desktop configuration here)

Following the quick start guide to build custom MCP servers

Contributing to open-source repositories of connectors and implementations
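For the first of these options, Claude Desktop reads its list of local servers from a JSON file (`claude_desktop_config.json`) with an `mcpServers` section. The sketch below generates such a fragment for the reference filesystem server. The directory path is a placeholder, and it is worth checking the current Claude Desktop documentation for the file's location on your platform before relying on this.

```python
import json

# Placeholder directory the filesystem server would be allowed to access.
ALLOWED_DIR = "/Users/you/Documents"

config = {
    "mcpServers": {
        # The key is a label of your choosing; the value says how to launch the server.
        "filesystem": {
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-filesystem", ALLOWED_DIR],
        }
    }
}

config_text = json.dumps(config, indent=2)
print(config_text)
```

Each additional server is simply another entry under `mcpServers`.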

The Model Context Protocol provides agents with:

Contextual Awareness: Agents need comprehensive context to make appropriate decisions. MCP enables them to pull relevant information from across enterprise systems, giving them a holistic view comparable to a knowledgeable employee.

System Interoperability: For agents to be truly useful, they must navigate between different enterprise tools and datasets. MCP creates standardized pathways between these systems, allowing agents to operate across organizational boundaries.

Actionable Information: Beyond just retrieving data, agents need to understand its significance and how it relates to their tasks. MCP helps maintain this contextual relationship between information pieces from different sources.

SO WHAT CAN YOU USE IT FOR?

With MCP as infrastructure, enterprises could think about developing agents that:

Automate operations by interfacing with multiple enterprise systems simultaneously (probably the most common use case)

Manage knowledge work by synthesizing company domain information across document repositories, email, and communication platforms

Code autonomously, with MCP providing access to repositories, documentation, testing systems, and deployment tools

Support decision-making through comprehensive access to the relevant business context

These are just a few of the use cases that come to mind.

A HYPOTHETICAL PRACTICAL EXAMPLE

Let's take a hypothetical example of how MCP could enable agentic systems in a concrete enterprise scenario:

A global manufacturing company wants to streamline its product development process. Previously, this workflow involved multiple departments using different systems:

Engineering teams working in CAD software and project management tools

Supply chain accessing inventory and vendor management systems

Finance using ERP systems for budgeting and cost analysis

Compliance referencing regulatory documentation in knowledge bases

Customer insights stored in CRM systems

The Challenge: Coordinating product changes required employees to manually gather information from each system. This resulted in delays, information gaps, and communication breakdowns.

What an MCP solution could look like: The company could implement MCP servers for each core system:

A Git-based MCP server for engineering documentation

A custom MCP server for their ERP system

MCP connectors for their Postgres-based regulatory database

Integration with their document management system via MCP

The Agentic Workflow would look something like this:

When a product modification is required, an AI agent can now:

Retrieve the original design specifications from engineering systems

Check component availability and lead times from supply chain systems

Calculate cost implications by accessing financial data

Verify regulatory compliance by checking against current regulations

Generate comprehensive change request documentation with all relevant information

Notify stakeholders across departments with contextualized information
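The steps above can be sketched as a simple orchestration function. Everything here is hypothetical: the tool names, the `call_tool` stub, and the canned responses stand in for real MCP servers, and a real agent would let the model decide which tool to call next rather than follow a fixed list.

```python
# Canned responses standing in for MCP servers (all values are made up).
FAKE_SERVERS = {
    "engineering.get_spec": lambda args: {"part": args["part_id"], "material": "steel"},
    "supply.check_availability": lambda args: {"lead_time_days": 14},
    "finance.estimate_cost": lambda args: {"delta_usd": 1250.0},
    "compliance.check": lambda args: {"compliant": True},
}


def call_tool(name, arguments):
    """Stub for an MCP tools/call round trip; looks up a canned response."""
    return FAKE_SERVERS[name](arguments)


def build_change_request(part_id):
    """Gather context from each system and assemble one change request."""
    args = {"part_id": part_id}
    context = {name: call_tool(name, args) for name in FAKE_SERVERS}
    return {
        "part_id": part_id,
        "context": context,
        # Only flag the change as ready if the compliance check passed.
        "ready_for_review": context["compliance.check"]["compliant"],
    }


change_request = build_change_request("bracket-42")
print(change_request["ready_for_review"])
for system, data in change_request["context"].items():
    print(system, data)
```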

This is a good example of 'agentic' in action. It demonstrates how MCP could enable an agentic system to perform complex cross-functional workflows by easily accessing context from multiple enterprise systems - something that would be very complex to build if you needed a custom integration for each data source.

Organizations looking to leverage MCP for agentic systems in this way can:

Use Claude Desktop's MCP capabilities to connect Claude to local file systems and tools

Implement pre-built MCP servers for common enterprise tools (Google Drive, Slack, GitHub, etc.)

Develop custom MCP servers for proprietary enterprise systems (not as hard as it sounds; I urge you to check out the reference server GitHub repo)

Create agent workflows that leverage these MCP connections

By providing this standardized interface, MCP can reduce the engineering complexity of building agentic systems that operate within enterprise environments.

A couple of things to be aware of that I noticed while investigating MCP:

Long-running agents need to maintain state across interactions, which, from what I could see, introduces some challenges with the current MCP implementation:

Persistent Context: While MCP provides access to the data sources, the protocol itself doesn't specify mechanisms for agents to maintain their own persistent state between operations. This would need to be implemented separately.

Session Management: For agents that run continuous processes over extended periods, you'd need to establish session management either within or alongside the MCP infrastructure.
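One way to handle this alongside MCP is to keep agent state in your own store, keyed by a session id. The sketch below uses a JSON file for simplicity. The `SessionStore` class and its fields are hypothetical and not part of MCP; a production setup would more likely use a database.

```python
import json
import os
import tempfile


class SessionStore:
    """Hypothetical file-backed store for agent state, kept outside MCP."""

    def __init__(self, path):
        self._path = path

    def _read_all(self):
        if not os.path.exists(self._path):
            return {}
        with open(self._path, encoding="utf-8") as f:
            return json.load(f)

    def load(self, session_id):
        """Return saved state for a session, or an empty starting state."""
        return self._read_all().get(session_id, {"completed_steps": []})

    def save(self, session_id, state):
        """Persist state so a restarted agent can resume where it left off."""
        sessions = self._read_all()
        sessions[session_id] = state
        with open(self._path, "w", encoding="utf-8") as f:
            json.dump(sessions, f)


path = os.path.join(tempfile.mkdtemp(), "sessions.json")
store = SessionStore(path)

state = store.load("change-request-17")
state["completed_steps"].append("retrieved_spec")
store.save("change-request-17", state)

# A new store instance (for example after a restart) sees the saved progress.
resumed = SessionStore(path).load("change-request-17")
print(resumed["completed_steps"])
```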

COMPETING STANDARDS AND PROTOCOL LANDSCAPE

Several other standards and frameworks aim to address the challenge of connecting AI models to enterprise data and tools:

LangChain and LlamaIndex: These frameworks provide programmatic ways to connect LLMs to various data sources and tools. LangChain excels at chaining LLM operations and LlamaIndex specializes in data indexing. MCP can actually provide the enterprise-grade standardization missing in these frameworks, i.e., MCP connectors can enhance LangChain workflows through interoperability.

OpenAI's Function Calling and Assistants API: OpenAI has developed its own means of tool integration, but these are tightly coupled to OpenAI's specific models rather than offering a model-agnostic protocol.

Consider the following example:

    # OpenAI Function Calling example (vendor-specific)
    {
      "name": "get_stock_price",
      "arguments": {"ticker": "AAPL"}
    }

    # MCP implementation (standardized)
    {
      "jsonrpc": "2.0",
      "id": 1,
      "method": "tools/call",
      "params": {
        "name": "get_current_stock_price",
        "arguments": {"company": "AAPL"}
      }
    }

MCP complements rather than replaces Function Calling - it standardizes how different vendors' function calls get executed. This enables multi-LLM architectures, i.e., ones where Anthropic's Claude and OpenAI models can share the same tool ecosystem.
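One way to picture this is a thin adapter that takes a model's function call and wraps it as an MCP `tools/call` request. This is a sketch under assumptions: the input shape mirrors the simplified function-call example in this article, and `to_mcp_request` is a hypothetical helper, not part of any SDK. Some vendor APIs encode `arguments` as a JSON string, so the helper accepts that form too.

```python
import json


def to_mcp_request(function_call, request_id):
    """Wrap a vendor-style function call as an MCP tools/call JSON-RPC request."""
    arguments = function_call["arguments"]
    if isinstance(arguments, str):
        # Some vendor APIs return arguments as a JSON-encoded string.
        arguments = json.loads(arguments)
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": function_call["name"], "arguments": arguments},
    }


# The same adapter serves calls produced by different models.
from_model_a = {"name": "get_stock_price", "arguments": {"ticker": "AAPL"}}
from_model_b = {"name": "get_stock_price", "arguments": '{"ticker": "AAPL"}'}

request_a = to_mcp_request(from_model_a, request_id=1)
request_b = to_mcp_request(from_model_b, request_id=2)
print(json.dumps(request_a, indent=2))
print(request_a["params"] == request_b["params"])
```

Because both calls end up in the same request shape, the tool itself only has to be implemented once, behind the MCP server.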

NVIDIA NeMo Retriever: This is focused primarily on retrieval-augmented generation, so it addresses a subset of the context problem but lacks the broader tool integration capabilities of MCP. You could imagine NeMo being used with MCP, for example:

User Prompt → [LLM with Function Calling] → [MCP Protocol] → [NeMo Retriever/RAG] → [Enterprise APIs]

Enterprise API Gateways: Traditional API management platforms are extending their capabilities to include AI model integration, though these typically focus on controlled access rather than contextual understanding. It is worth noting, however, that emerging AI gateways such as Kong's are starting to bridge this gap by adding LLM-specific features.

Google Vertex AI Agent Engine: Vertex AI Agent Engine and the Model Context Protocol (MCP) take fundamentally different approaches to openness and interoperability in AI agent development. MCP is a specification, whereas Vertex AI Agent Engine is a managed product. This distinction matters IMO, but Google’s Vertex AI Agent Engine represents a middle ground – more open than proprietary alternatives but less open than MCP’s pure protocol approach. For enterprises needing production-ready solutions, Vertex AI Agent Engine can offer pragmatic agentic openness within Google’s ecosystem.

MCP differentiates itself through its open standard approach, model-agnostic design, and focus on bidirectional context flow between AI systems and data sources. Unlike frameworks that require developer expertise, MCP aims to provide more of a "plug-and-play" connectivity that can scale across enterprise environments.

In summary, interoperability challenges are without doubt the "elephant in the room" for enterprise adoption of agentic AI, but open interoperability standards such as MCP offer a potential path forward that can avoid the pitfalls of vendor lock-in. The practical adoption of MCP will likely depend on how well it can balance the need for standardization with the flexibility required by the diverse landscape of enterprise systems.